AI Is Deeply Flawed, And We Can Prove It.

New research paints AI in a new light.

Some seriously hyperbolic statements have been made about AI recently. Many leading AI figures, notably Sam Altman and Elon Musk, have claimed that their AIs could become superhumanly intelligent in the coming years and render huge swathes of jobs obsolete. However, recent research completely undermines this narrative. Not only does this research show that AI’s superiority is highly fragile, but it also clearly demonstrates that AI is far from intelligent and, as such, has some serious weaknesses.

The paper “Can Go AIs be adversarially robust?” details this research and is a fascinating read. The researchers used a particular type of AI known as “adversarial bots” to find and exploit flaws in another AI system, specifically KataGo, an AI that plays Go at a superhuman level. Adversarial bots are a kind of evolving AI. They are given a goal, typically breaking or beating another AI, and then try to accomplish it without any prior knowledge of how to do so, “learning” from each failure and getting better with every iteration. In doing so, they can home in on “novel” ways to break the other AI. The researchers took an AI like this and gave it the task of beating KataGo at Go.

Other adversarial bots can be used to jailbreak chatbots like ChatGPT, making them produce harmful content. However, such adversarial attacks don’t have to come from AIs; they can come from us. For example, people have placed traffic cones on the bonnets/hoods of self-driving cars, and others have replied to suspected Twitter bots’ posts, telling them to forget their previous prompts and write poetry about tangerines. Both of these break the AI.
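To make the “learn from each failure” idea a little more concrete, here is a deliberately tiny sketch in Python. It is not the paper’s method: the researchers’ adversaries played Go against KataGo itself, whereas this toy swaps Go for rock-paper-scissors and assumes a hypothetical frozen “victim” with a built-in bias standing in for a blind spot. The attacker is never told how the victim plays; it only sees whether each round was a win, draw or loss, and uses a simple epsilon-greedy rule (an assumption chosen for brevity) to drift towards whatever works.

```python
import random

MOVES = ["rock", "paper", "scissors"]
# Each key beats its value, e.g. paper beats rock.
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def victim_move(rng):
    # Hypothetical frozen "victim": it never changes, and its bias towards
    # rock is the blind spot the attacker can discover.
    return rng.choices(MOVES, weights=[0.5, 0.3, 0.2])[0]


def reward(attacker, victim):
    # +1 for a win, -1 for a loss, 0 for a draw.
    if BEATS[attacker] == victim:
        return 1.0
    if BEATS[victim] == attacker:
        return -1.0
    return 0.0


def train_attacker(rounds=20000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    value = {m: 0.0 for m in MOVES}  # running average reward per move
    count = {m: 0 for m in MOVES}
    for _ in range(rounds):
        # Occasionally explore a random move; otherwise exploit the best so far.
        if rng.random() < epsilon:
            move = rng.choice(MOVES)
        else:
            move = max(MOVES, key=lambda m: value[m])
        r = reward(move, victim_move(rng))
        count[move] += 1
        value[move] += (r - value[move]) / count[move]  # incremental mean
    return value


values = train_attacker()
best = max(values, key=values.get)
print(best, {m: round(v, 2) for m, v in values.items()})
```

With these settings the attacker ends up favouring paper, the move that beats the victim’s favourite, even though nothing in the code tells it so. Real adversarial bots are vastly more sophisticated, but the underlying intuition is similar: keep probing a fixed opponent and reinforce whatever exploits its weaknesses.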

So, what did these researchers find? Can AI be robust against such attacks? No. No, it can’t.

That isn’t just me saying that. Huan Zhang, a computer scientist at the University of Illinois, Urbana-Champaign, said, “The paper leaves a significant question mark on how to achieve the ambitious goal of building robust real-world AI agents that people can trust.” Stephen Casper, a computer scientist at the Massachusetts Institute of Technology in Cambridge, adds: “It provides some of the strongest evidence to date that making advanced models robustly behave as desired is hard.”

So, how did the researchers come to such a damning conclusion?

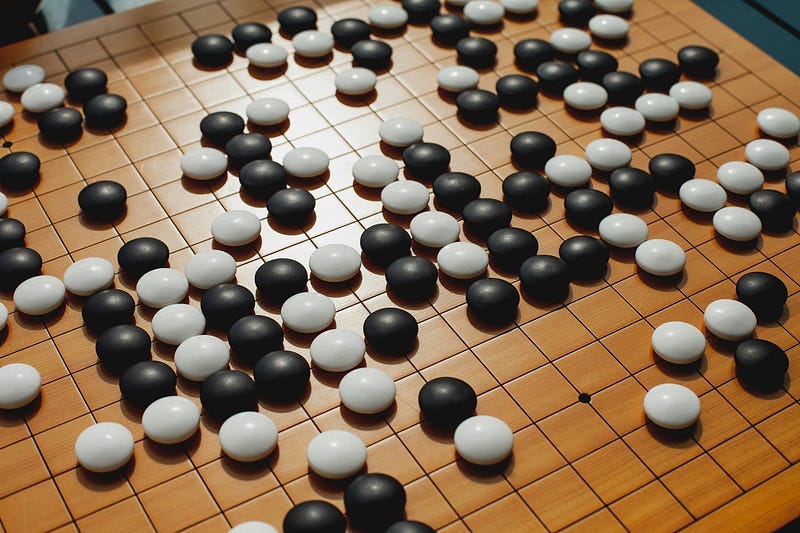

To understand that, you need to understand the ancient board game Go. The game of Go starts with an empty board that is not too dissimilar to a chessboard. Each player has an unlimited supply of pieces called stones, with one player taking the black stones and the other taking white. Black goes first. The main objective of the game is to use your stones to form territories by surrounding vacant areas of the board. It is also possible to capture your opponent’s stones by surrounding them.

While the game appears simple, it is incredibly hard for a machine to play well. Hence, it was headline news when AIs finally started to beat top human players at Go. One of the strongest Go AIs today is KataGo. However, researchers had previously found a fascinatingly simple technique to beat KataGo, known as the double sandwich. The technique essentially builds up a much larger group of stones on the verge of capture than would typically be seen in a real game. A human player would easily spot such a brazen move and stop it, but KataGo failed to recognise that the large group of stones surrounding its own was about to capture them. As a result, the AI looked like it didn’t know how to play Go. The researchers taught the technique to an amateur Go player, who was then able to beat the AI 93% of the time!

Since then, the team that developed KataGo has tried to fix this weakness. But even after this initial update, adversarial bots that were orders of magnitude simpler could still beat it 91% of the time. The KataGo team then trained it against these adversarial bots in the hope that this would resolve the weakness, but the adversarial bots could still win 81% of the time. Finally, the KataGo team trained the entire AI from scratch using an entirely different neural network, yet the adversarial bots could still beat it 78% of the time!

In short, this paper details how advanced AI can’t protect itself from adversarial attacks. But why?

Well, this highlights two issues. Firstly, AI doesn’t actually understand anything. KataGo doesn’t understand the game of Go; if it did, these methods of beating it wouldn’t work. Despite its name, AI isn’t intelligent; it is just fuzzy statistics. Secondly, AI can’t cope with novel inputs that aren’t reflected in its training data, and anything outside this limited dataset will make it act erratically. Larger datasets help but do not eliminate the novel-input problem, and there is currently no method that gives AI genuine cognitive understanding. What this paper shows is that we presently have no way to mitigate either issue, and as such, this massive flaw will be inherent in every AI.

Adam Gleave, who led the research, has said that the results could have broad implications for AI systems, such as ChatGPT. “The key takeaway for AI is that these vulnerabilities will be difficult to eliminate,” Gleave says. “If we can’t solve the issue in a simple domain like Go, then in the near-term there seems little prospect of patching similar issues like jailbreaks in ChatGPT.”

So, will AI become superhumanly intelligent or be superior to human workers? I doubt it. This paper shows that AI superiority is incredibly fragile and can be defeated by simple changes. It also demonstrates how deeply unreliable AI can be, and how big a security risk relying on it to replace human workers could be. So, who is going to tell Altman and Musk?


Sources: Nature, arXiv, Science Gallery, British Go Association, Vice, Kyle Hill